What is a Rate Limiter?

Rate limiting, as the name suggests, refers to preventing the frequency of an operation from exceeding a defined limit.

Imagine a service that receives a huge number of requests but can only serve a limited number of them per second. To handle this, we need a rate limiting (throttling) mechanism that allows only as many requests as the service is configured to handle. A rate limiter thus limits the number of events an entity (user, device, IP address, etc.) can perform in a particular time window.

In large-scale systems, rate limiting is commonly used to protect underlying services and resources. In distributed systems it is generally used as a defensive mechanism so that shared resources remain available.

Why Use a Rate Limiter?

Eliminate spikiness in traffic: Prevent intentional or unintentional traffic spikes caused by entities sending a large number of requests.

Security: By limiting the number of password attempts, we can protect services from attackers trying to guess credentials by brute force.

Prevent resource starvation: The most common reason for rate limiting is to improve the availability of API-based services by avoiding resource starvation. Rate limiting helps mitigate load-based denial of service (DoS) attacks: other users are not starved even when one user bombards the API with requests.

Prevent bad design practices and abusive use: Without API limits, developers of client applications may fall back on sloppy tactics, for example requesting the same information over and over again.

Different Types of Rate Limiters

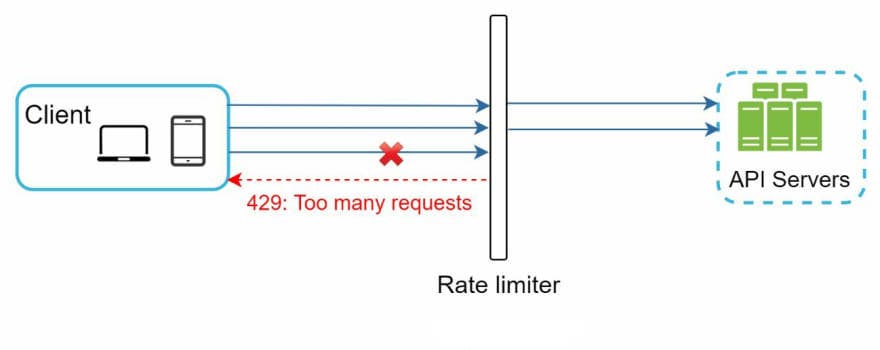

Server-Side Rate Limiter

Implemented on the server (provider) side to protect endpoints from overload, excessive use, and denial of service (DoS) attacks.

A limit is set on how many requests a consumer is allowed to make in a given time window.

Any request above the limit is rejected with an appropriate response, such as HTTP status 429 (Too Many Requests).

The rate limit is specified in terms of requests per second (rps), requests per minute (rpm), etc.
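Specific algorithms are left for later posts, so the following is only a minimal Python sketch, assuming the simplest possible scheme: a fixed-window counter per consumer, where each window starts with the consumer's first request. It shows a limit expressed as requests per window, a 429 (Too Many Requests) response once the limit is exceeded, and a `Retry-After` header telling the client when to try again. `FixedWindowLimiter`, `handle_request` and the injectable `clock` are hypothetical names for illustration, not part of any framework.

```python
import math
import time
from typing import Callable, Dict, Tuple


class FixedWindowLimiter:
    """Hypothetical server-side limiter: allows at most `limit` requests per
    `window_seconds` for each consumer key (user ID, API key, IP, ...)."""

    def __init__(self, limit: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window = window_seconds
        self.clock = clock  # injectable so tests can control time
        # consumer key -> (start time of its current window, requests counted)
        self.counters: Dict[str, Tuple[float, int]] = {}

    def allow(self, key: str) -> bool:
        """Count the request and return True if it fits in the current window."""
        now = self.clock()
        start, count = self.counters.get(key, (now, 0))
        if now - start >= self.window:
            start, count = now, 0  # previous window expired: start a new one
        if count >= self.limit:
            return False  # limit reached; rejected requests are not counted
        self.counters[key] = (start, count + 1)
        return True

    def retry_after(self, key: str) -> float:
        """Seconds until the consumer's current window ends."""
        now = self.clock()
        start, _ = self.counters.get(key, (now, 0))
        return max(0.0, self.window - (now - start))


def handle_request(limiter: FixedWindowLimiter, consumer: str) -> Tuple[int, Dict[str, str]]:
    """Hypothetical request handler returning (HTTP status, response headers)."""
    if not limiter.allow(consumer):
        # Over the limit: 429 Too Many Requests, plus a hint on when to retry.
        retry = math.ceil(limiter.retry_after(consumer))
        return 429, {"Retry-After": str(retry)}
    return 200, {}


# Example: a limit of 100 requests per minute (rpm) per consumer.
limiter = FixedWindowLimiter(limit=100, window_seconds=60)
status, headers = handle_request(limiter, "user-42")  # first request -> 200
```

A fixed window is easy to reason about, but a consumer can get up to twice the limit through in a short burst around a window boundary. The counters also live in one process's memory, so a deployment with several server instances would need shared storage for them.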

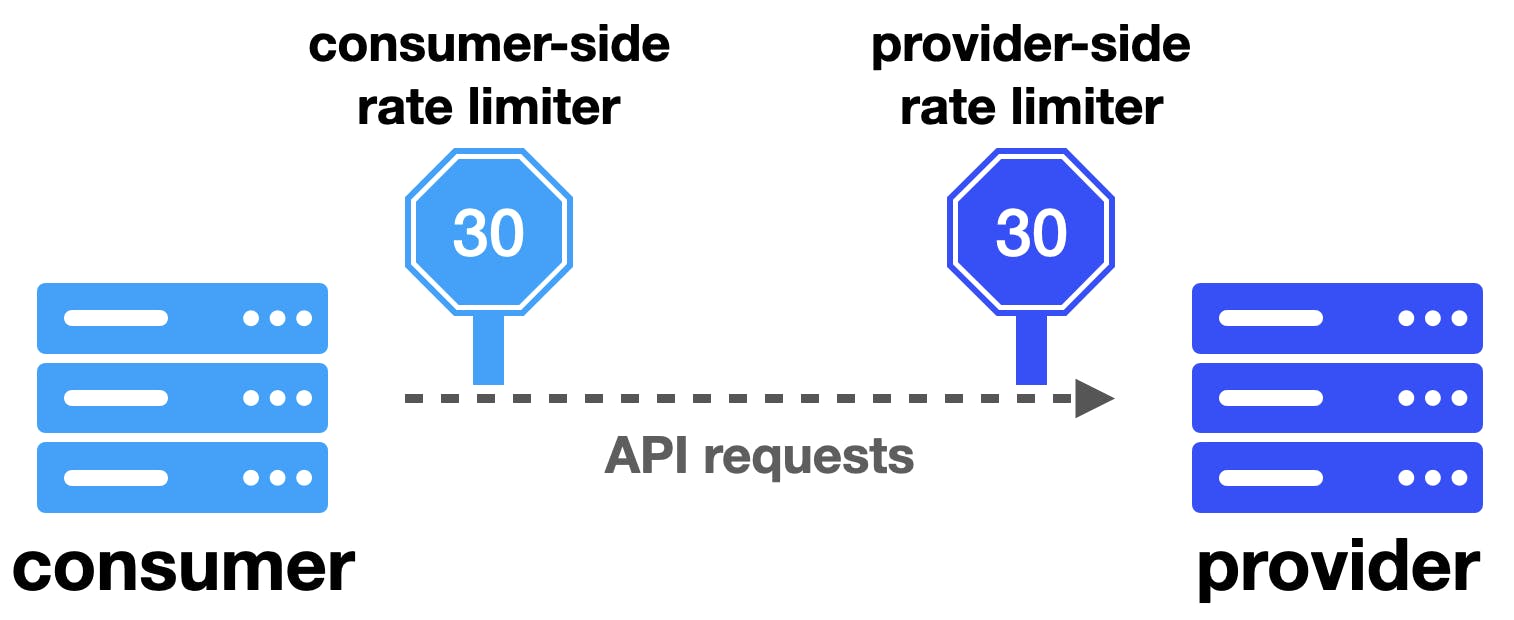

Client-Side Rate Limiter

Implemented on the client (consumer) side to ensure we do not overload the service provider (upstream).

Used to call upstream APIs in a rate-limited way and to handle throttled calls according to the use case.

Even though client-side (consumer) and server-side (provider) rate limiters are similar in concept, there are some differences. The main difference is the behaviour when the rate limit is reached. While a server-side rate limiter often just rejects requests, a client-side implementation can choose from a variety of approaches:

Wait until it is allowed to make requests again. This approach makes sense in applications that make requests as part of an asynchronous processing flow.

Wait for a fixed time period and time out the request if the rate limiter still does not let it through. This approach is helpful for systems that directly process user requests and depend on a rate-limited downstream API. In such a situation it is only acceptable to block for a short period of time, which is often derived from the maximum latency promised to the user in a service level agreement (SLA).

Just cancel the request. This approach makes sense in applications where it is not possible to wait for the rate limiter to let a request through, or where processing every request is not critical to the application logic.
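The three client-side behaviours above can be sketched with a single limiter object. This is a minimal Python sketch, assuming a simple scheme that spaces outgoing calls evenly (one call every `1 / rate_per_second` seconds) rather than any particular algorithm from the upcoming posts; `ClientRateLimiter` and its method names are hypothetical.

```python
import threading
import time
from typing import Optional


class ClientRateLimiter:
    """Hypothetical client-side limiter that spaces outgoing calls so that
    at most `rate_per_second` requests are sent to the upstream API."""

    def __init__(self, rate_per_second: float) -> None:
        self.interval = 1.0 / rate_per_second
        self.next_allowed = time.monotonic()  # earliest time of the next call
        self.lock = threading.Lock()          # safe to share between threads

    def _reserve(self, max_wait: Optional[float]) -> Optional[float]:
        """Reserve the next slot and return how long to sleep until it, or
        return None (reserving nothing) if it is more than max_wait away."""
        with self.lock:
            now = time.monotonic()
            wait = max(0.0, self.next_allowed - now)
            if max_wait is not None and wait > max_wait:
                return None
            self.next_allowed = max(now, self.next_allowed) + self.interval
            return wait

    def acquire(self) -> None:
        """Approach 1: wait until a request is allowed (asynchronous flows)."""
        wait = self._reserve(max_wait=None)
        assert wait is not None  # no cap, so a slot is always reserved
        time.sleep(wait)

    def acquire_with_timeout(self, timeout: float) -> bool:
        """Approach 2: wait at most `timeout` seconds (e.g. derived from an SLA).
        Returns False if the caller should time out the request."""
        wait = self._reserve(max_wait=timeout)
        if wait is None:
            return False
        time.sleep(wait)
        return True

    def try_acquire(self) -> bool:
        """Approach 3: never wait; return False so the caller cancels the request."""
        return self._reserve(max_wait=0.0) is not None
```

One design choice worth noting: `acquire_with_timeout` gives up immediately when the next free slot is further away than the timeout, instead of sleeping for the whole timeout and then failing. The caller sees the same outcome without wasting the wait, and a failed attempt reserves nothing, so cancelled or timed-out requests do not consume upstream capacity.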

Conclusion

Rate limiting not only helps secure your application against attacks but also helps keep it available. Different rate limiting algorithms can be used on the server or client side depending on the use case.

We will dive deep into rate limiting algorithms, with their advantages and disadvantages, in upcoming blogs. Till then, stay tuned! :D