Virtualisation and containers are the talk of the town, and they fit naturally into the modern cloud-native way of building applications. Let's break down the jargon around them and understand each term one by one.

Virtualisation

Virtualization is the process by which a single system resource, such as RAM, CPU, disk, or networking, is ‘virtualized’ and represented as multiple resources.

In virtualization, a piece of software behaves as if it were an independent computer. This piece of software is called a virtual machine (VM), also known as a ‘guest’ computer. (The computer on which the VM runs is called the ‘host’.) The guest has an OS as well as its own virtual hardware.

The number of VMs that can run on one host is limited only by the host’s available resources. The user can run the OS of a VM in a window like any other program, or they can run it in fullscreen so that it looks and feels like a genuine host OS.

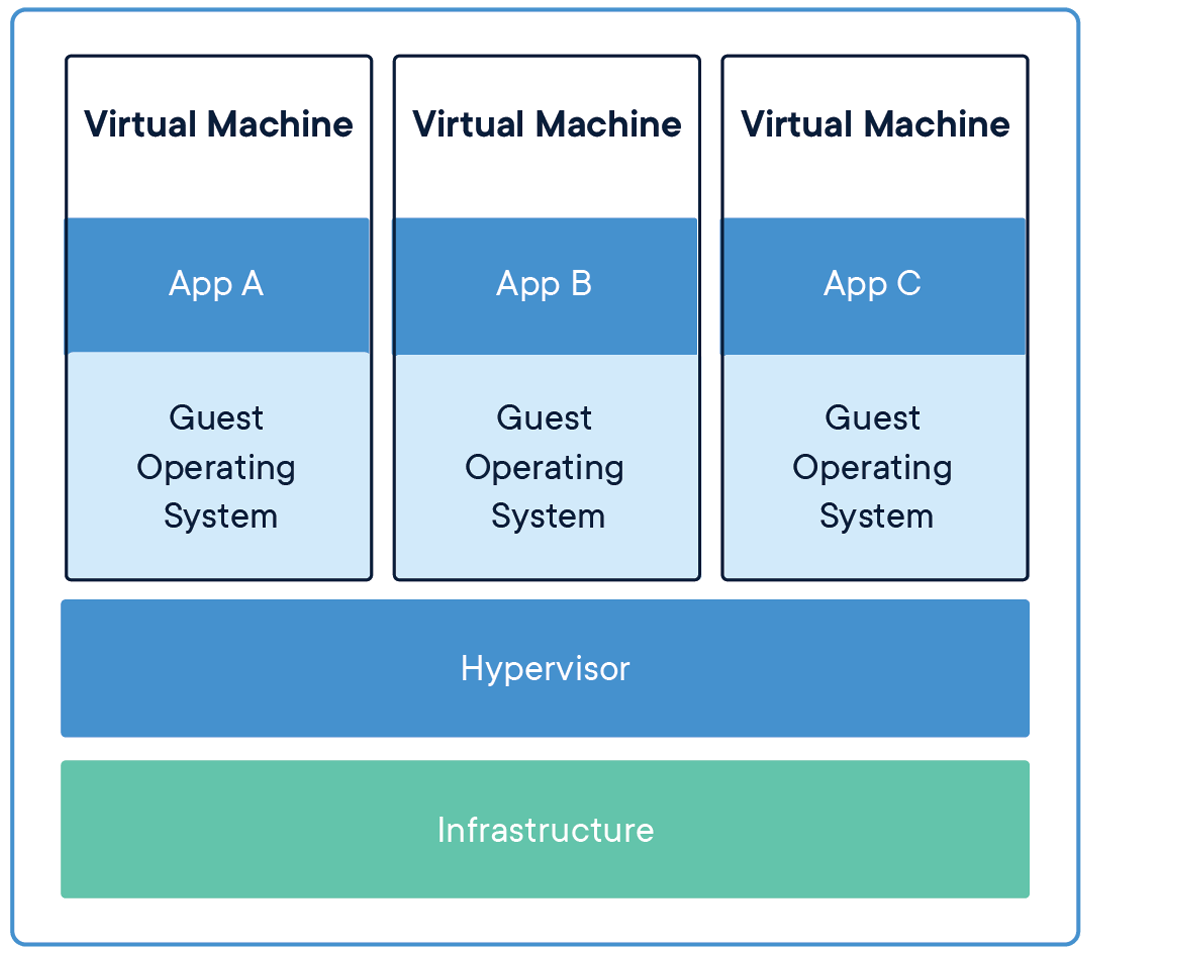

Virtual Machine

Virtual machines are heavyweight software packages that provide complete replicas of low-level hardware devices such as the CPU, disk, and network devices.

A virtual machine (VM) is a digital version of a physical computer. To the software running inside it, a VM behaves no differently from a physical computer such as a laptop, smartphone, or server.

Virtual machine software can run programs and operating systems and store data.

They have a CPU, memory, and disks to store our files, and they can connect to the internet if needed. While the parts that make up our computer (called hardware) are physical and tangible, VMs are often thought of as virtual computers or software-defined computers within physical servers, existing only as code.

They run on a physical machine and access computing resources through software called a hypervisor. The hypervisor abstracts the physical machine’s resources into a pool that can be provisioned and distributed as needed, enabling multiple VMs to run on a single physical machine.
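To make the "pool" idea concrete, here is a minimal Python sketch of a hypervisor handing out slices of a host's CPU and memory to VMs. The `Hypervisor` and `VM` classes and all the sizes are hypothetical, and the model assumes no overcommitment (real hypervisors can overcommit CPU and memory). It also shows why the number of VMs on one host is limited by the host's available resources.

```python
from dataclasses import dataclass, field


@dataclass
class VM:
    """A hypothetical guest that reserves a fixed share of host resources."""
    name: str
    vcpus: int
    memory_gb: int


@dataclass
class Hypervisor:
    """Toy model: the host's physical CPUs and RAM form one shared pool."""
    total_cpus: int
    total_memory_gb: int
    vms: list[VM] = field(default_factory=list)

    def free_cpus(self) -> int:
        return self.total_cpus - sum(vm.vcpus for vm in self.vms)

    def free_memory_gb(self) -> int:
        return self.total_memory_gb - sum(vm.memory_gb for vm in self.vms)

    def provision(self, vm: VM) -> bool:
        """Carve a VM out of the pool only if enough resources remain."""
        if vm.vcpus <= self.free_cpus() and vm.memory_gb <= self.free_memory_gb():
            self.vms.append(vm)
            return True
        return False  # pool exhausted: this is what caps the VM count


host = Hypervisor(total_cpus=8, total_memory_gb=32)
print(host.provision(VM("web", vcpus=4, memory_gb=16)))    # True
print(host.provision(VM("db", vcpus=4, memory_gb=8)))      # True
print(host.provision(VM("cache", vcpus=2, memory_gb=4)))   # False: no CPUs left
```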

A VM is a virtualized instance of a computer that can perform almost all of the same functions as a computer, including running applications and operating systems. It is like creating a computer within an already existing computer.

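Note that the term is also used for process virtual machines such as the Java Virtual Machine (JVM), which lets any system with a JVM run Java applications as if they were native to that system. Unlike the system VMs described above, a JVM does not emulate hardware or run a guest OS; it executes Java bytecode as an ordinary process on the host.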

Container

A container is a standard unit of software that packages up code and all its dependencies.

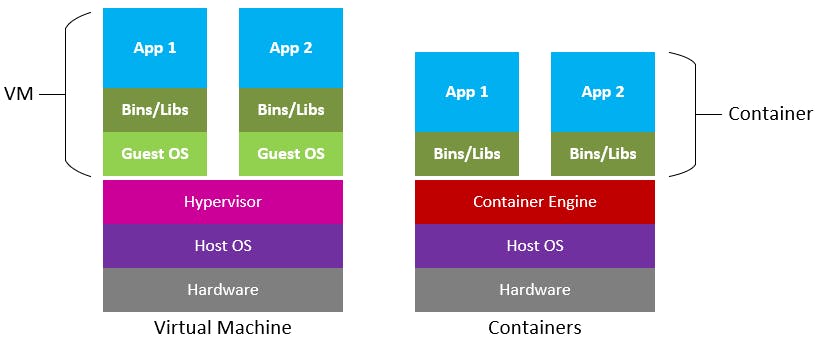

Containers effectively virtualise the host operating system (or kernel) and isolate an application’s dependencies from other containers running on the same machine. Before containers, if we had multiple applications deployed on the same virtual machine (VM), any change to a shared dependency (for example, upgrading a library that two applications rely on) could break one of them, so the tendency was to have one application per virtual machine.

The solution of one application per VM solved the isolation problem for conflicting dependencies, but it wasted a lot of resources (CPU and memory). This is because a VM runs not only our application but also a full operating system that needs resources too, so less would be available for our application to use.

Containers solve this problem with two pieces: a container engine and a container image, which is a package of an application and its dependencies. The container engine runs applications in containers, isolating them from other applications running on the host machine. This removes the need to run a separate operating system for each application, allowing for higher resource utilisation and lower costs. As a result, many containers can run simultaneously on the same host.
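The resource argument above can be worked through with a little arithmetic. The sketch below compares total memory for "one VM per application" against "one container per application". All sizes are hypothetical, chosen only to make the numbers easy to follow, and the model simplifies by giving the host the same overhead in both cases and ignoring any per-container runtime overhead.

```python
# Hypothetical sizes in MB, chosen only to keep the arithmetic readable.
APPS = 10
APP_MB = 500        # memory each application needs
GUEST_OS_MB = 1000  # memory a full guest OS needs inside each VM
HOST_OS_MB = 1000   # memory the host OS plus hypervisor/engine needs


def one_vm_per_app_mb(apps: int, app_mb: int, guest_os_mb: int, host_os_mb: int) -> int:
    """Every application brings its own guest OS along with it."""
    return host_os_mb + apps * (app_mb + guest_os_mb)


def one_container_per_app_mb(apps: int, app_mb: int, host_os_mb: int) -> int:
    """Containers share the host kernel, so no guest OS is paid per app."""
    return host_os_mb + apps * app_mb


vm_total = one_vm_per_app_mb(APPS, APP_MB, GUEST_OS_MB, HOST_OS_MB)
container_total = one_container_per_app_mb(APPS, APP_MB, HOST_OS_MB)
print(vm_total, container_total, vm_total - container_total)  # 16000 6000 10000
```

Under these assumptions the saving is exactly one guest OS per application (`APPS * GUEST_OS_MB`), which is the memory that is freed up for the applications themselves.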

Virtual machines virtualize an entire machine down to the hardware layers, whereas containers only virtualize the software layers above the operating system level.

Different containers share the same host operating system (OS) kernel, but each container behaves as if it had its own OS. Each container holds only the code and libraries it depends on.

A Docker container image is a lightweight, standalone, executable package of software that includes everything needed to run an application.

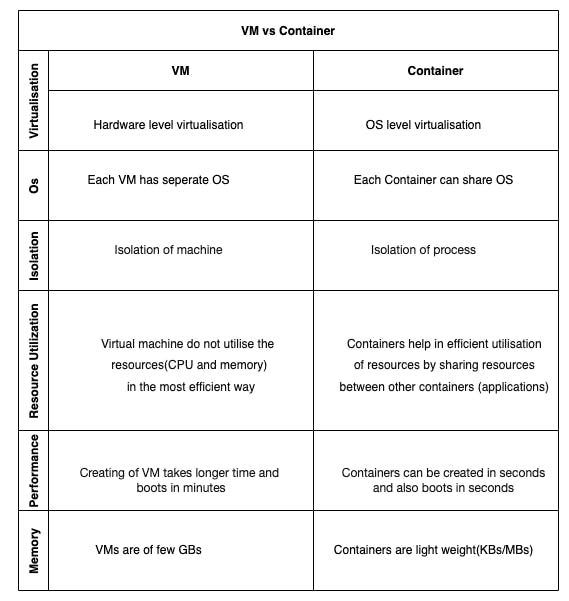

Container vs VM

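Containers provide OS-level virtualisation, whereas VMs provide hardware-level virtualisation. This leads to other differences between the two: because containers share the host kernel, they are typically much smaller and start in seconds or less, while VMs carry a full guest OS, take longer to boot, and can run an operating system different from the host's. In exchange, VMs offer stronger isolation, since each one has its own kernel.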

Docker

Docker is a container platform that lets us create and run containers. It provides the ability to package and run an application in a loosely isolated environment called a container. The isolation and security allow us to run many containers simultaneously on a given host.

In other words, Docker is software that reads a definition of the container's environment (its ‘world view’), performs the necessary setup (for example, yum/apt installs), and packages the result into an image (roughly the equivalent of compiling to a .class file). Docker is not itself a container; it is a platform that reads the container definition and then builds, starts, and manages container processes.

A Dockerfile describes how to build an image, much as a Maven (or Gradle) build file describes how to build an application.

Docker largely solves a classic problem every development team has faced: “It works on my machine…!!”

Docker Image

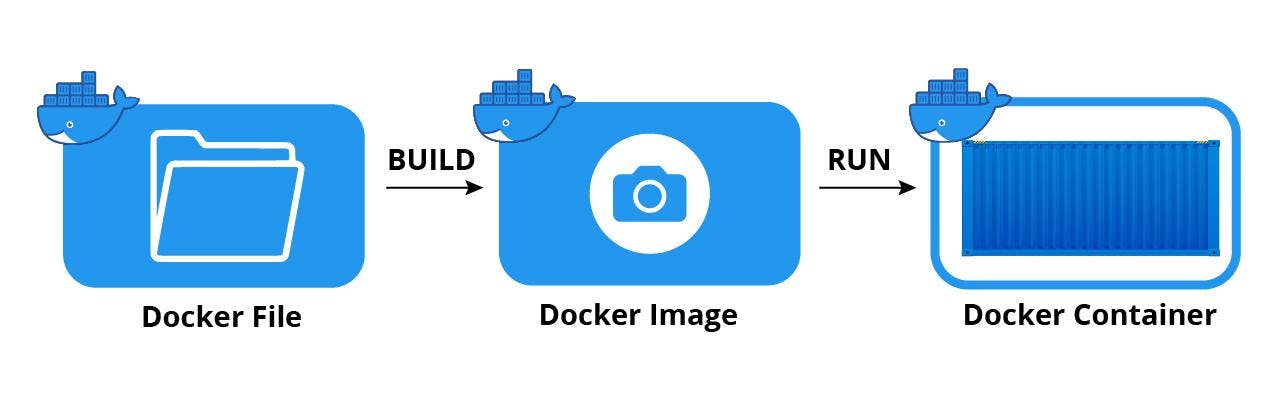

A Docker image is a read-only template that contains a set of instructions for creating a container that can run on the Docker platform. It provides a convenient way to package up applications and preconfigured server environments, which we can use for our own private use or share publicly with other Docker users. Docker images are also the starting point for anyone using Docker for the first time.

It is made up of a collection of files that bundle together all the essentials (such as installations, application code, and dependencies) required to configure a fully operational container environment. We can create a Docker image by using a Dockerfile.
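A rough mental model helps here: an image is a read-only stack of file layers, and each container started from it gets its own thin writable layer on top. The Python sketch below is only an analogy under that assumption, not Docker's actual storage driver or API. The `Image` and `Container` classes and the file paths are hypothetical, and deletions (which Docker records with "whiteout" files) are not modelled.

```python
from types import MappingProxyType


class Image:
    """Toy read-only image: an ordered stack of file layers merged into one view."""

    def __init__(self, layers: list[dict[str, str]]):
        merged: dict[str, str] = {}
        for layer in layers:      # later layers override earlier ones
            merged.update(layer)
        self.files = MappingProxyType(merged)  # read-only view of the files


class Container:
    """A running instance: the shared image plus a private writable layer."""

    def __init__(self, image: Image):
        self.image = image
        self.writable: dict[str, str] = {}

    def read(self, path: str) -> str:
        # Look in the container's own layer first, then fall back to the image.
        if path in self.writable:
            return self.writable[path]
        return self.image.files[path]

    def write(self, path: str, content: str) -> None:
        # Copy-on-write: changes land in this container only; the image is untouched.
        self.writable[path] = content


image = Image([
    {"/etc/os-release": "Ubuntu 18.04"},         # hypothetical base layer
    {"/usr/share/nginx/html/index.html": "hi"},  # hypothetical application layer
])
web1, web2 = Container(image), Container(image)
web1.write("/tmp/session", "user-42")
print(web1.read("/tmp/session"))        # user-42
print("/tmp/session" in image.files)    # False: the image is unchanged
print("/tmp/session" in web2.writable)  # False: containers are isolated
```

This is why many containers can be started from one image cheaply: they share the read-only layers and only pay for what they change.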

Dockerfile

A Dockerfile is a text file that defines what the process needs and what it can or cannot do. It lists the step-by-step instructions for building a Docker image, along with the command used to start the process.

A Docker image is a template; a Docker container is a running instance of that template.

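```dockerfile
# Use the official Ubuntu 18.04 image as the base
FROM ubuntu:18.04

# Install nginx and curl in a single RUN instruction (the trailing
# backslashes continue the command), then clear the apt cache to keep
# the image small
RUN apt-get update && \
    apt-get upgrade -y && \
    apt-get install -y nginx curl && \
    rm -rf /var/lib/apt/lists/*

# Document the port nginx listens on and keep nginx in the foreground
# so the container stays up for as long as nginx runs
EXPOSE 80
CMD ["nginx", "-g", "daemon off;"]
```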

The Docker engine (platform) reads this Dockerfile and builds an image. A Dockerfile defines the following:

- How to build our container

- How to run our container

- What libraries are necessary

- What steps to take to build our container
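Before it can build anything, the engine has to read the Dockerfile instruction by instruction. The Python sketch below is a deliberately simplified, hypothetical parser (`parse_dockerfile` is not part of any Docker tool): it skips blank and comment lines and joins backslash line continuations into one instruction. The real Dockerfile parser handles much more, such as parser directives, a configurable escape character, and heredocs.

```python
def parse_dockerfile(text: str) -> list[tuple[str, str]]:
    """Split Dockerfile text into (INSTRUCTION, arguments) pairs (simplified)."""
    instructions: list[tuple[str, str]] = []
    pending = ""  # text accumulated across backslash-continued lines
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # ignore blank lines and comment lines
        if line.endswith("\\"):
            pending += line[:-1].strip() + " "  # continuation: keep collecting
            continue
        full = pending + line
        pending = ""
        keyword, _, args = full.partition(" ")
        instructions.append((keyword.upper(), args.strip()))
    if pending:  # file ended in the middle of a continued instruction
        keyword, _, args = pending.strip().partition(" ")
        instructions.append((keyword.upper(), args.strip()))
    return instructions


DOCKERFILE = r"""
# Use the official Ubuntu 18.04 image as the base
FROM ubuntu:18.04

# Install nginx and curl
RUN apt-get update && \
    apt-get upgrade -y && \
    apt-get install -y nginx curl && \
    rm -rf /var/lib/apt/lists/*

EXPOSE 80
CMD ["nginx", "-g", "daemon off;"]
"""

for number, (keyword, args) in enumerate(parse_dockerfile(DOCKERFILE), start=1):
    print(f"{number}. {keyword} {args}")
```

Each parsed instruction then becomes one build step; in Docker, instructions such as RUN typically produce a new image layer.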

Kubernetes

Kubernetes (K8s) is an open-source container orchestration (management) framework that decides where our containers should run, starts them, and keeps them running.

Kubernetes provides the following:

- Compute scheduling: It considers the resource needs of our containers to automatically find the right place (node) to run them.

- Self-healing: If a container crashes, Kubernetes creates a new one to replace it, ensuring the expected number of replicas (instances) keeps running. With more than one replica, the remaining ones keep serving while the replacement starts.

- Horizontal scaling: By observing CPU or custom metrics, Kubernetes can add and remove instances as needed, within the configured minimum and maximum replica counts.

- Vertical scaling: With the Vertical Pod Autoscaler add-on (not installed by default), Kubernetes can raise or lower the CPU and memory requests of our containers based on their observed usage.

- Volume management: It manages the persistent storage used by our applications.

- Service discovery & load balancing: A set of instances can be reached through a stable IP address and DNS name, and traffic is load-balanced across those instances.

- Automated rollouts & rollbacks: During updates, the health of the new instances is monitored; if the rollout fails, it stops progressing, and we can roll back to the previous version (for example, with kubectl rollout undo). Fully automatic rollback usually requires additional tooling.

- Secret & configuration management: It manages application configuration and secrets.
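Several of the features above follow the same pattern: compare the desired state with what is actually running, then act to close the gap. The Python sketch below illustrates three of them in a heavily simplified form; the function names, node names, and numbers are hypothetical. Scheduling keeps only nodes with enough free CPU and memory and then picks the one with the most free CPU (a crude stand-in for Kubernetes' real scoring). Self-healing tops the replica list back up to the desired count. The horizontal-scaling function applies the Horizontal Pod Autoscaler's documented ratio, ceil(current replicas × current metric / target metric), clamped to the min/max bounds, while ignoring its tolerance and stabilisation settings.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int   # free CPU in millicores
    free_mem_mi: int  # free memory in MiB


def schedule(cpu_m: int, mem_mi: int, nodes: list[Node]) -> Optional[str]:
    """Compute scheduling: filter nodes that fit, then prefer the most free CPU."""
    fits = [n for n in nodes if n.free_cpu_m >= cpu_m and n.free_mem_mi >= mem_mi]
    if not fits:
        return None  # nothing fits: the workload would stay pending
    best = max(fits, key=lambda n: n.free_cpu_m)
    best.free_cpu_m -= cpu_m   # reserve the requested resources on that node
    best.free_mem_mi -= mem_mi
    return best.name


def reconcile(desired: int, pods: list[str], next_id: int) -> tuple[list[str], int]:
    """Self-healing: create or remove replicas until the count matches `desired`."""
    pods = list(pods)
    while len(pods) < desired:
        pods.append(f"web-{next_id}")  # hypothetical replica naming
        next_id += 1
    while len(pods) > desired:
        pods.pop()
    return pods, next_id


def hpa_desired_replicas(current: int, current_metric: float, target_metric: float,
                         min_replicas: int, max_replicas: int) -> int:
    """Horizontal scaling: scale by the metric ratio, clamped to [min, max]."""
    desired = math.ceil(current * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


nodes = [Node("node-a", 500, 1024), Node("node-b", 2000, 4096)]
print(schedule(250, 512, nodes))    # node-b
print(schedule(1000, 8192, nodes))  # None: no node has 8192 MiB free

# "web-1" crashed; the loop brings the replica count back to 3
print(reconcile(3, ["web-0", "web-2"], next_id=3))  # (['web-0', 'web-2', 'web-3'], 4)

# 4 replicas at 90% CPU against a 60% target
print(hpa_desired_replicas(4, 90, 60, min_replicas=1, max_replicas=10))  # 6
```

In real Kubernetes these decisions are made by separate components (the scheduler, controllers such as the one behind Deployments, and the autoscaler), each running its own loop of this observe-compare-act kind.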

Conclusion

Containerization and containers are the foundation of modern applications and enjoy huge popularity in the tech world, with Docker as one of the best-known players. Docker provides the toolset to easily create container images of our applications, and Kubernetes gives us the platform to run and maintain them all.

I hope this article helped demystify the jargon around virtualisation, containers, Docker, and Kubernetes. Thank you for your time. Stay tuned for more such articles!