Introduction

In our previous article on Rate-Limiter we learnt about the basics, need and different types of rate limiter. In this article we will look into the different rate limiting algorithms we can use to limit how often each user/entity can call the API with its benefits and drawbacks. Let’s review each of them so we can pick the ideal rate limiting design option for our API needs.

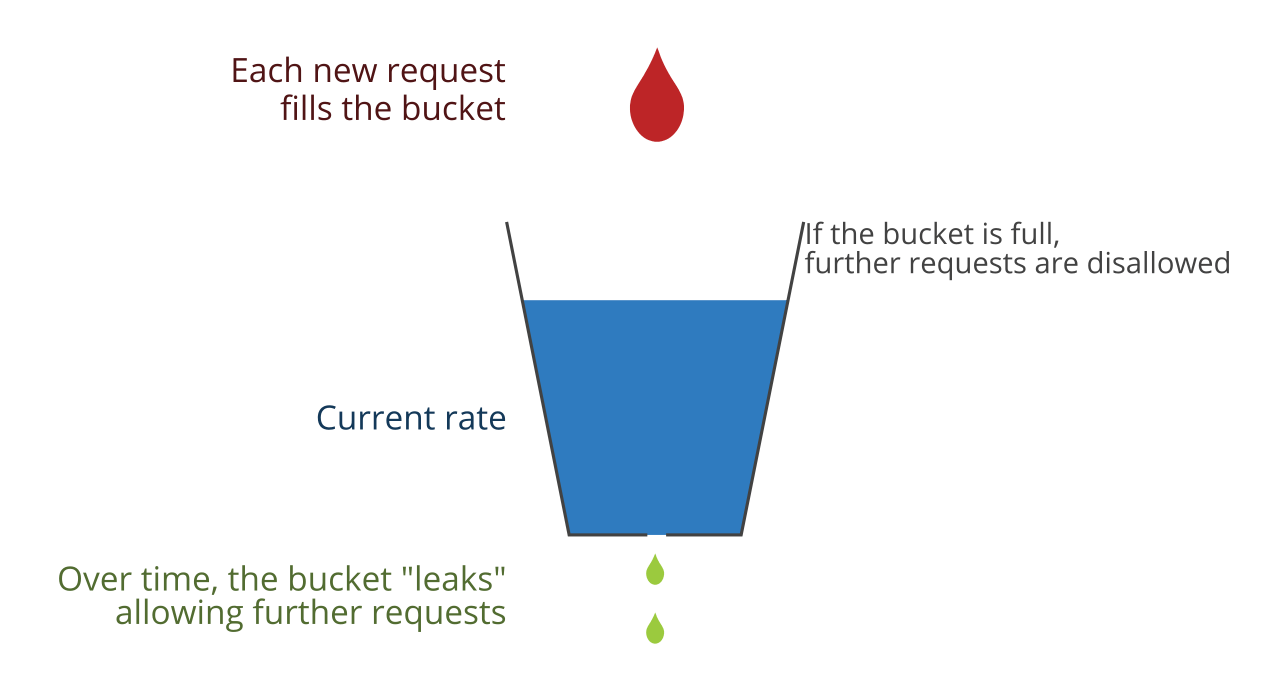

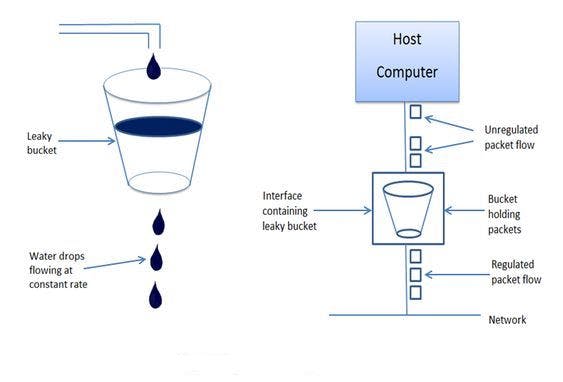

Leakey Bucket

- Leaky Bucket is a simple intuitive algorithm to rate-limit using a queue with a finite capacity.

- Bucket holding the requests.

- When registering a request, the system appends it to the end of the queue.

- Processing for the first item on the queue occurs at a regular interval or first in, first out (FIFO).

- All requests in a given time frame beyond the capacity of the queue(if queue is full) are spilled off(or leaked)

Advantages

- Smoothens out bursts of requests and processes them at a constant rate.

- Easy to implement on a load balancer and is memory efficient for each user.

- A constant near uniform flow is maintained to the server irrespective of the number of requests.

Disadvantages

- Burst of requests can fill up the bucket leading to starving of new requests.

- Provides no guarantee that requests get completed in a given amount of time.

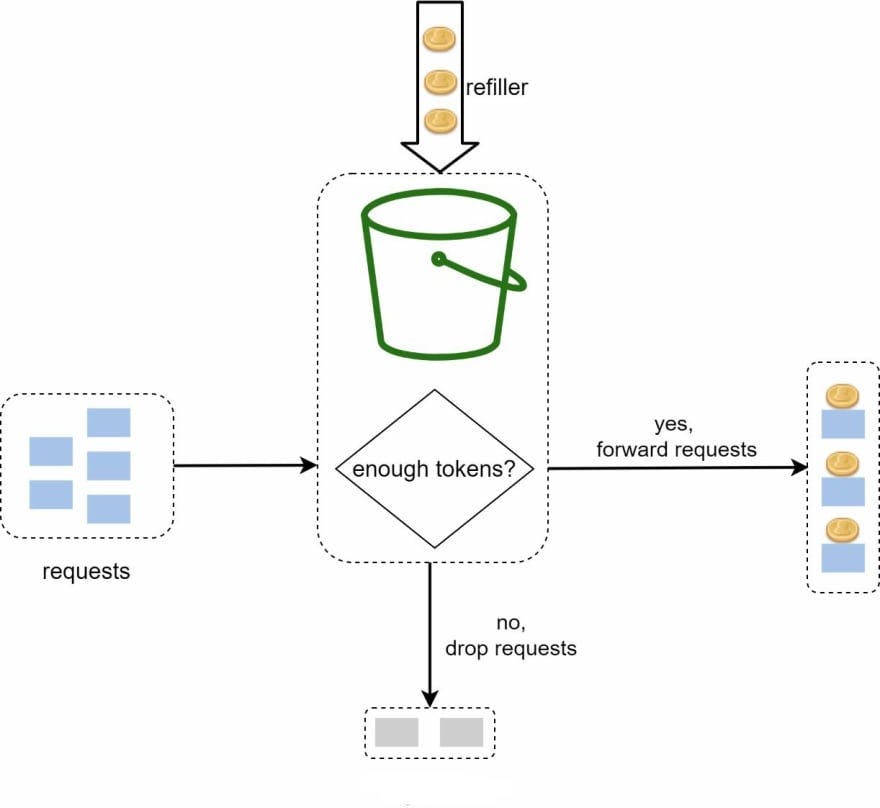

Token Bucket

- Token Bucket is similar to leaky bucket.

- We assign tokens on a user level.

- For a given time duration d, the number of request r packets that a user can receive is defined. Every time a new request arrives at a server, the current number of tokens for that corresponding user is fetched and then throttling is decided based on token count.

- Tokens are refilled only when there is a different(next) minute.

Advantages

- This algorithm is memory efficient as we are saving less amount of data per user for our application.

Disadvantages

- Can cause race condition in a distributed environment. This happens when there are two requests from two different application servers trying to fetch the token at the same time.

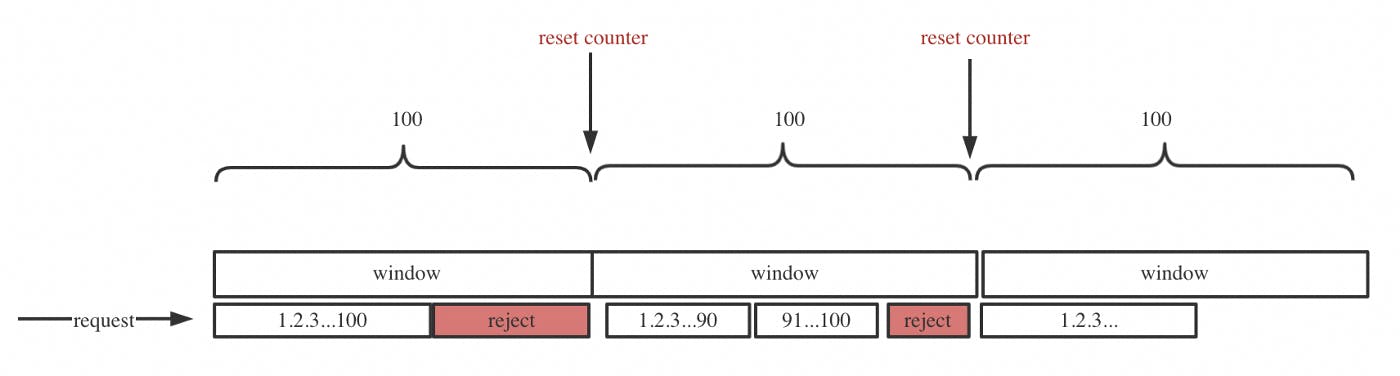

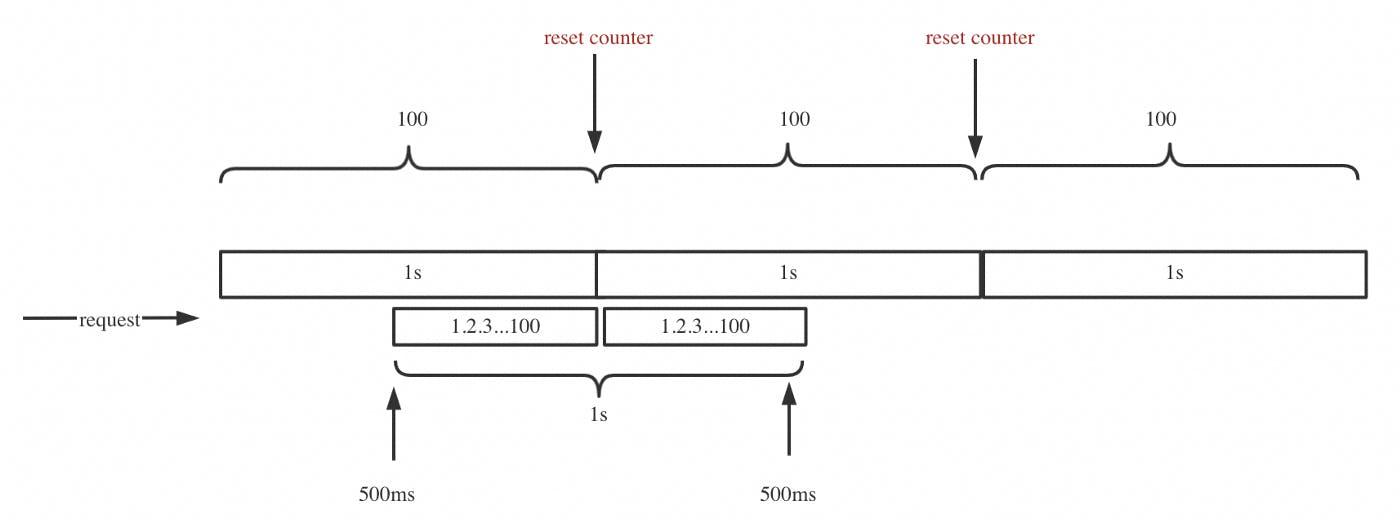

Fixed Window

- One of the most basic rate limiting mechanisms.

- Keep a counter for a given duration of time, and keep incrementing it for every request we get. Once the limit is reached, we drop all further requests till the time duration is reset.

- Time window is considered from start of time-unit to the end of time-unit.

Advantage

- Ensures that most recent requests are served without being starved by old requests.

Disadvantages

- Single burst of traffic right at the edge of the limit might hoard all the available slots for both the current and next time slot leading to bursting problem

- Consumers might bombard the server at the edge in an attempt to maximise number of requests served as we are resetting the StartTime at the end of every minute potentially allowing twice number of requests per minute.

- In a distributed environment, "read-and-then-write-behaviour" can create a race condition.

Sliding Log

- Involves maintaining a time stamped log of requests at the user level.

- The system keeps these requests time sorted in a Set or a Table.

- Discards all requests with timestamps beyond a threshold. Every minute we look out for older requests and filter them out. Then we calculate the sum of logs to determine the request rate. If the request would exceed the threshold rate, then it is held, else it is served.

Advantages

- Does not suffer from the boundary conditions of fixed windows.

- Enforcement of the rate limit will remain precise.

- Since the system tracks the sliding log for each consumer, you don’t have the stampede effect that challenges fixed windows.

Disadvantages

- Can be costly to store an unlimited number of logs for every request.

- Also expensive to compute because each request requires calculating a summation over the consumer’s prior requests, potentially across a cluster of servers.

- Not scalable to handle large bursts of traffic or denial of service attacks.

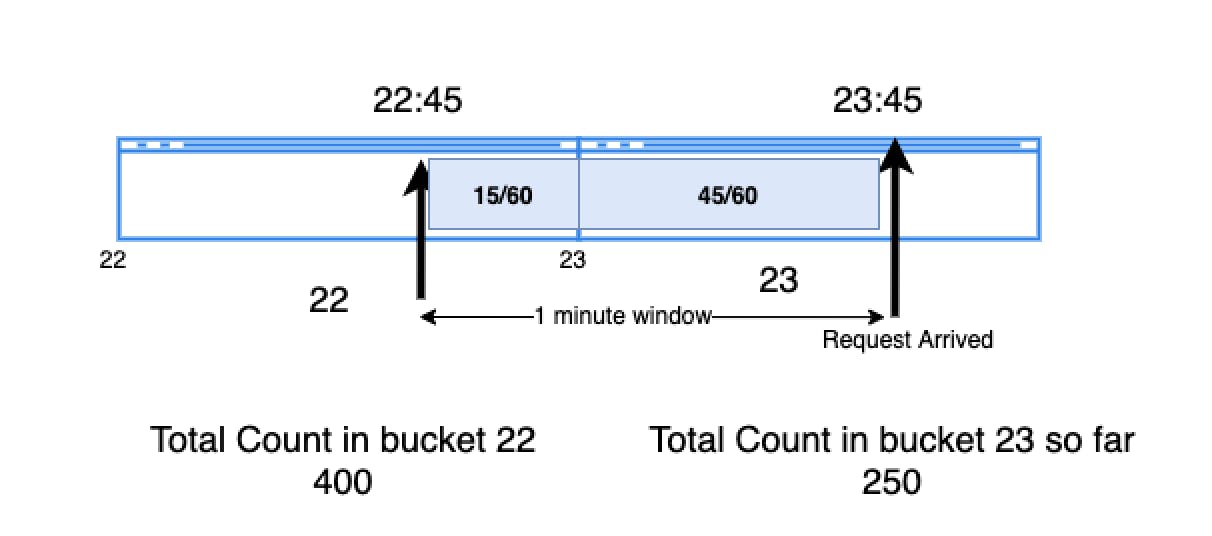

Sliding Window

- It combines the fixed window algorithm’s low processing cost and the sliding log’s improved boundary conditions.

- We keep a list/table of time sorted entries, with each entries being a hybrid and containing the timestamp and the number of requests at that point.

- We keep a sliding window of our time duration and only service requests in our window for the given rate. If the sum of counters is more than the given rate of the limiter, then we take only the first sum of entries equal to the rate limit.

Advantages

- Avoids the starvation problem of the leaky bucket and the bursting problems of fixed window implementations.

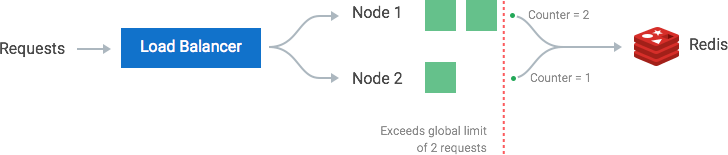

Distributed System Rate Limiting

The above algorithms works very well for single server applications. But the problem becomes very complicated when there is a distributed system involved with multiple nodes or app servers. It becomes more complicated if there are multiple rate limited services distributed across different server regions.

The two broad problems that comes across in these situations are Inconsistency and Race Conditions:

Inconsistency

In case of complex systems with multiple app servers distributed across different regions and having their own rate limiters, we need to define a global rate limiter. A consumer could surpass the global rate limiter individually if it receives a lot of requests in a small time frame. The greater the number of nodes, the more likely the user will exceed the global limit. There are two ways to solve for these problems:

Sticky Session: Have a sticky session in your load balancers so that each consumer gets sent to exactly one node. The downsides include lack of fault tolerance & scaling problems when nodes get overloaded.

Centralized Data Store: Use a centralized data store like Redis/HazelCast to handle counts for each window and consumer. The added latency is a problem, but the flexibility provided makes it an elegant solution.

Race Conditions

Race conditions happen in a get-then-set approach with high concurrency. Each request gets the value of counter then tries to increment it. But by the time that write operation is completed, several other requests have read the value of the counter(which is not correct and also known as dirty-read). Thus a very large number of requests are sent than what was intended. This can be mitigated using locks on the read-write operation, thus making it atomic. But this comes at a performance cost as it becomes a bottleneck causing more latency.

Solution

Both the problem of Inconsistency and race conditions of rate limiting in distributed system can be handled using an in-memory data grid(IMDG) like Hazelcast which can be used as a distributed cache to control total number of incoming API request count. Hazelcast supports entry processing. An entry processor is a function that executes your code on a map entry in an atomic way.

How HazelCast Entry processors solves our problem

Using EntryProcessor, we can read and update the value in one atomic operation. i.e. only one client updates the map.

In a distributed system with multiple data grids , If we perform the process from a client or from a member where the keys do not exist, we effectively perform two network hops for each update: the first to retrieve the data and the second to update the mutated value. An entry processor executes a read and updates upon the member where the data resides. This eliminates the costly network hops and reduces the network round-trip from two to only one.

You can read more our EntryProcessors from here

Congratulations and Thank you on making it to the end! I hope this article helped in clearing your concepts around Rate limiting algorithms and help you decide which one to use based on your use-case.

Feel free to comment, follow for updates!